BRFv4 - API Reference

Overview

The BRFv4 API is the same on all platforms. Everything described on this page can be applied to JavaScript, ActionScript 3 or C++.

Before you read this API, please take a look at what you can do with BRFv4, especially what results to expect from face detection and face tracking (link: What can I do with it?).

There are two main classes: BRFManager and BRFFace.

Going forward, "roi" means "region of interest": the rectangular area of an image that an algorithm works in.

BRFManager

(control flow)

init( Rectangle imageDataSize, Rectangle imageRoi, String appId ) : void

(face detection)

setFaceDetectionParams( int minWidth, int maxWidth, int stepSize, int minMergeNeighbors ) : void

setFaceDetectionRoi( Rectangle roi ) : void

(face tracking)

(point tracking - optical flow)

setOpticalFlowParams( int patchSize, int numLevels, int numIterations, double error ) : void

addOpticalFlowPoints( Vector<Point> pointArray ) : void

getOpticalFlowCheckPointsValidBeforeTracking() : Boolean

setOpticalFlowCheckPointsValidBeforeTracking( Boolean value ) : void

BRFFace

BRFMode

BRFState

BRFManager

BRFManager controls everything. First you need to call:

init( ... )

Based on the image size, the SDK sets reasonable default parameters to start tracking a single face, but you can change any parameter once BRFv4 is ready. Keep in mind that most parameters are pixel values, so you need to adjust them to the size of the image data that you put in, eg.

setFaceDetectionParams( int minWidth, int maxWidth, int stepSize, int minMergeNeighbors ) : void

If you use a 640x480 camera resolution, the trackable face size is 480 pixels at maximum. A reasonable minimum would be 25% of that maximum, which is 120 pixels.

For a 1920x1080 resolution the trackable face size is 1080 pixels at maximum, and 25% of that is 270 pixels.

That's why the examples use percentages for all areas and sizes. This way the camera resolution can easily be changed.
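
The percentage approach described above can be sketched as a small helper. This is only an illustration: faceSizeRange is a hypothetical name, not part of BRFv4, and using the shorter image side as the base is an assumption taken from the two resolutions mentioned above.

```javascript
// Hypothetical helper (not part of BRFv4): derive pixel-based face sizes
// from the image size, so a resolution change needs no manual retuning.
function faceSizeRange(imageWidth, imageHeight, minPercent = 0.25, maxPercent = 1.0) {
  // Assumption: the shorter image side limits the largest trackable face.
  const base = Math.min(imageWidth, imageHeight);
  return {
    minWidth: Math.round(base * minPercent),
    maxWidth: Math.round(base * maxPercent)
  };
}

const vga = faceSizeRange(640, 480);      // minWidth 120, maxWidth 480
const fullHd = faceSizeRange(1920, 1080); // minWidth 270, maxWidth 1080
```

The resulting values could then be passed as minWidth and maxWidth to the parameter functions described further down.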

Once everything is set up you need to update the image data before calling:

update( ... )

BRFv4 then tries to detect a face. If a face is detected, BRFv4 immediately starts tracking it.

Face detection operates globally. That's why the result functions belong to BRFManager and not to BRFFace:

getAllDetectedFaces() : Vector<Rectangle>

getMergedDetectedFaces() : Vector<Rectangle>

The detailed face tracking data can be retrieved by calling:

getFaces() : Vector<BRFFace>

which gives you a list of BRFFaces with a length based on your setting of:

setNumFacesToTrack( int numFaces )

And that's all there is to it. Init, set parameters, and continuously update.

For more information about the implementation details for your specific platform, see the sample packages.
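
The "continuously update" step can be sketched as a per-frame function. This is a minimal sketch, not the SDK's own sample code: processFrame is a hypothetical name, manager is assumed to be an object exposing update() and getFaces() as described on this page, and trackingState is assumed to be your platform's BRFState.FACE_TRACKING value.

```javascript
// Hypothetical per-frame step (not part of BRFv4).
// manager:       assumed to expose update(imageData) and getFaces()
// trackingState: assumed to be the platform's BRFState.FACE_TRACKING value
function processFrame(manager, imageData, trackingState) {
  // Let the manager analyse the current image first.
  manager.update(imageData);

  // Then read the results and keep only faces that are fully tracked.
  const faces = manager.getFaces();
  const tracked = [];
  for (let i = 0; i < faces.length; i++) {
    if (faces[i].state === trackingState) {
      tracked.push(faces[i]);
    }
  }
  return tracked;
}
```

Call such a function once per camera frame, after init() and after any parameter changes.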

(control flow)

init( Rectangle imageDataSize, Rectangle imageRoi, String appId ) : void

This function is the starting point.

It tells BRFv4 what image data size to expect, which area to operate on and what your appId is.

If you want to switch the image data and thus need to change the image data size, just call init() again to reinitialize BRFv4.

Don't create a new BRFManager! Reuse a single instance.

When you first call init(), a license check is performed. It's a single server call. If it fails in the trial version, BRFv4 will stop working after about 1 to 2 minutes. The commercial version is fail-safe, which means that even if no connection to the license server can be established, BRFv4 will still work.

Apart from that, BRFv4 works fully on the client side.

- imageDataSize (Rectangle)

- The size of the image data you put in.

For a 640x480 camera resolution you need an imageDataSize of Rectangle(0, 0, 640, 480). For a mobile portrait camera resolution you will need eg. Rectangle(0, 0, 480, 640).

- imageRoi (Rectangle)

- The area within imageDataSize that you want to analyse. Usually you want to analyse the whole image, but sometimes you only need a part of it and restrict the area.

For a 640x480 camera resolution you will usually set the roi to the same size as the imageDataSize: Rectangle(0, 0, 640, 480). To restrict it to the center, use eg. Rectangle(80, 0, 480, 480), which restricts the analysed area from xStart = 80 to xEnd = 80 + 480 = 560.

- appId (String)

- Choose your own appId (minimum length is 8 characters). In our examples we usually use something like "com.tastenkunst.brfv4.as3.examples". Create your own appId using your reversed company domain.
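
The centered roi from the imageRoi example can be computed instead of hard-coded. This is a rough sketch: centeredSquareRoi is a hypothetical helper, and the plain object it returns only stands in for your platform's Rectangle type.

```javascript
// Hypothetical helper (not part of BRFv4): the largest centered square
// inside the image, as plain numbers. Feed these into your platform's
// Rectangle(x, y, width, height).
function centeredSquareRoi(imageWidth, imageHeight) {
  const size = Math.min(imageWidth, imageHeight);
  return {
    x: Math.floor((imageWidth - size) / 2),
    y: Math.floor((imageHeight - size) / 2),
    width: size,
    height: size
  };
}

const roi = centeredSquareRoi(640, 480); // x 80, y 0, width 480, height 480
```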

update( Bitmap imageData ) : void

This function does the tracking job. It operates in one of the three BRFModes.

In C++ implementations, eg. OpenCV (camera handling) or iOS, you need to update the imageData on your own before calling update.

All other platforms have their known image containers and BRFv4 is already set up to deal with those.

The results can be retrieved after calling update() as arrays of Rectangle or BRFFace:

getAllDetectedFaces() : Vector<Rectangle>

getMergedDetectedFaces() : Vector<Rectangle>

getFaces() : Vector<BRFFace>

- imageData (platform dependent image data)

- This is platform dependent. In C++ implementations, eg. OpenCV (camera handling) or iOS, you need to update the imageData on your own before calling update. All other platforms have their known image containers and BRFv4 is already set up to deal with those, eg. Android(Java): Bitmap, AS3: BitmapData etc.

reset() : void

All face trackers reset and start over by performing face detection. Also all point tracking points are removed.

setMode( String mode ) : void

Sets one of the three modes from BRFMode:

- BRFMode.FACE_DETECTION

- This mode will only perform the face detection part and skip the detailed analysis.

- BRFMode.FACE_TRACKING

- This is the usual mode for face tracking. It performs face detection to find a face and then tracks the facial features in detail.

- BRFMode.POINT_TRACKING

- This mode skips face detection and face tracking and only performs point tracking. To perform face tracking and point tracking simultaneously, choose FACE_TRACKING as the mode.

- mode (String)

- either BRFMode.FACE_DETECTION, BRFMode.FACE_TRACKING or BRFMode.POINT_TRACKING

(face detection)

setFaceDetectionParams( int minWidth, int maxWidth, int stepSize, int minMergeNeighbors ) : void

Internally BRFv4 uses a DYNx480 (landscape) or 480xDYN (portrait) image for its analysis. So 480px is the base size that every other input size is compared to (eg. 1280x720 -> 854x480).

The minimum detectable face sizes for the following resolutions are:

- 640 x 480: 24px (480 / 480 = 1.00; 1.00 * 24 = 24) (base)

- 1280 x 720: 36px (720 / 480 = 1.50; 1.50 * 24 = 36)

- 1920 x 1080: 54px (1080 / 480 = 2.25; 2.25 * 24 = 54)

Also: faces (blue) are only detected at step sizes that are multiples of 12, measured at the 480px base size and scaled with the input resolution.

So the actual face detection layers (sizes) are:

- 640 x 480: 24, 36, 48, 60, 72, ..., 456, 468, 480

- 1280 x 720: 36, 54, 72, 90, 108, ..., 684, 702, 720

- 1920 x 1080: 54, 81, 108, 135, 162, ..., 1026, 1053, 1080
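
The layer lists above follow from the 480px base size and can be reproduced with a few lines. This is a sketch under assumptions: detectionLayers is a hypothetical helper, the shorter image side is taken as the side that maps to 480px, and stepSize is assumed to be expressed in the base scale, which is what the listed layers suggest.

```javascript
const BASE_SIZE = 480;    // internal base size from the text above
const BASE_MIN_FACE = 24; // minimum detectable face size at the base size
const BASE_STEP = 12;     // minimum step size at the base size

// Hypothetical helper (not part of BRFv4): list the detection layer sizes
// in input pixels for a given image size.
function detectionLayers(imageWidth, imageHeight, stepSize = BASE_STEP) {
  // Assumption: the shorter side is the one scaled to BASE_SIZE.
  const scale = Math.min(imageWidth, imageHeight) / BASE_SIZE;
  const layers = [];
  for (let base = BASE_MIN_FACE; base <= BASE_SIZE; base += stepSize) {
    layers.push(base * scale);
  }
  return layers;
}

const layers720 = detectionLayers(1280, 720); // starts 36, 54, 72 and ends at 720
```

Passing a larger stepSize, eg. 24, returns every second layer, which matches the description of skipping detection layers.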

Detected faces (blue) get merged (yellow) if they are

+ roughly placed in the same location,

+ roughly the same size and

+ have at least minMergeNeighbors other rectangles in the same spot.

- minWidth (int)

- The starting/minimum face size (in pixel) that the algorithm is looking for.

- maxWidth (int)

- The end/maximum face size (in pixel) that the algorithm is looking for.

- stepSize (int)

- Minimum step size is 12. The value must be a multiple of 12 (12, 24, 36 etc.). Increasing the stepSize skips detection layers, which makes the face detection less accurate but faster.

- minMergeNeighbors (int)

- Number of candidates (blue) necessary in one spot to merge all of them into a "detected face" (yellow).
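
Because stepSize must be a multiple of 12 with a minimum of 12, it can help to normalize a requested value before passing it on. A minimal sketch, where normalizeStepSize is a hypothetical helper and rounding to the nearest multiple is just one possible policy:

```javascript
// Hypothetical helper (not part of BRFv4): snap a requested step size to
// the nearest multiple of 12, never going below 12.
function normalizeStepSize(requested) {
  const multiples = Math.round(requested / 12);
  return Math.max(1, multiples) * 12;
}

const small = normalizeStepSize(5);  // 12
const exact = normalizeStepSize(24); // 24
```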

setFaceDetectionRoi( Rectangle roi ) : void

Sets the region of interest, the area within the image data where the face detection shall do its work.

- roi (Rectangle)

- A rectangular area within the image.

getAllDetectedFaces( ) : Vector<Rectangle>

Returns all face candidates (blue) that were detected during the last update.

getMergedDetectedFaces( ) : Vector<Rectangle>

Returns all actual merged/detected faces (yellow) of the last update call.

(face tracking)

setNumFacesToTrack( int numFaces ) : void

By default BRFv4 sets the number of faces to track to 1 (single face tracking). To track more than one face, increase this value. Be aware that every additional face you track reduces performance.

- numFaces (int)

- The number of faces you want to track, usually 1.

setFaceTrackingStartParams( double minWidth, double maxWidth, double rx, double ry, double rz ) : void

By setting these parameters you can control the conditions for picking up a face. Restrict the distance/range to the camera by setting minWidth and maxWidth. Smaller values mean more distance to the camera. Restricting the starting angles may help to create a consistent user experience.

Keep the minWidth and maxWidth values about the same as the ones set in setFaceDetectionParams().

- minWidth (double)

- A pixel based value. The minimum size a face must have to be picked up.

- maxWidth (double)

- A pixel based value. The maximum size a face can have to be picked up.

- rx (double)

- A degree angle. Rotation around the X axis (pitch, turning head up/down)

- ry (double)

- A degree angle. Rotation around the Y axis (yaw, turning head to the left/right)

- rz (double)

- A degree angle. Rotation around the Z axis (roll, tilt head to the left/right)

setFaceTrackingResetParams( double minWidth, double maxWidth, double rx, double ry, double rz ) : void

By setting these parameters you can control the conditions for resetting the tracking of a face. If a face gets smaller than minWidth or larger than maxWidth or if the head turns more than rx, ry, or rz, the tracking will reset.

- minWidth (double)

- A pixel based value. Faces smaller than this value will trigger a reset of the tracking.

- maxWidth (double)

- A pixel based value. Faces larger than this value will trigger a reset of the tracking.

- rx (double)

- A degree angle. Rotation around the X axis (pitch, turning head up/down).

- ry (double)

- A degree angle. Rotation around the Y axis (yaw, turning head to the left/right)

- rz (double)

- A degree angle. Rotation around the Z axis (roll, tilt head to the left/right)
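
The reset condition described above can be mirrored in a small check, eg. to predict when a face is about to be dropped. This only illustrates the described rule and is not the SDK's internal logic: exceedsResetParams is a hypothetical helper, the face width is passed in directly, rotations are taken in radians (as BRFFace reports them) and converted to degrees, and treating the angle limits as symmetric around zero is an assumption.

```javascript
// Hypothetical check (not part of BRFv4): would a face with this width and
// these rotations fall outside the given reset parameters?
// faceWidth:     face size in pixels
// rotationX/Y/Z: angles in radians
// params:        { minWidth, maxWidth, rx, ry, rz }, angles in degrees
function exceedsResetParams(faceWidth, rotationX, rotationY, rotationZ, params) {
  const toDegrees = 180 / Math.PI;
  // Assumption: limits apply to the absolute angle in either direction.
  return faceWidth < params.minWidth ||
    faceWidth > params.maxWidth ||
    Math.abs(rotationX * toDegrees) > params.rx ||
    Math.abs(rotationY * toDegrees) > params.ry ||
    Math.abs(rotationZ * toDegrees) > params.rz;
}

const limits = { minWidth: 120, maxWidth: 480, rx: 30, ry: 40, rz: 30 };
const tooSmall = exceedsResetParams(100, 0, 0, 0, limits); // true
const inRange = exceedsResetParams(200, 0, 0, 0, limits);  // false
```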

getFaces( ) : Vector<BRFFace>

Returns the list of currently tracked faces. Please see BRFFace for details.

(point tracking)

setOpticalFlowParams( int patchSize, int numLevels, int numIterations, double error ) : void

Optical Flow is a point tracking algorithm. It is used in BRFv4 to stabilize fast movements. You can perform this point tracking either standalone by setting BRFMode.POINT_TRACKING or in parallel by setting either of the other two modes.

- patchSize (int)

- This is the area around a point that is used to compare to the new image. Has to be an odd number. Larger values will decrease performance. Lower values will decrease accuracy. 21 (10 pixels in each direction) is a good default value.

- numLevels (int)

- The number of pyramid levels to use. Either 1, 2, 3 or 4 (default). Lower values will decrease accuracy.

- numIterations (int)

- The maximum number of iterations to perform the search.

- error (double)

- Usually smaller than 0.0001. Higher values will decrease accuracy.

addOpticalFlowPoints( Vector<Point> pointArray ) : void

Adds new points to track. This should be done synchronously right before calling update(). If you call this method in the middle of an update call, eg. by doing so in a click/touch handler, the tracking might get confused.

- pointArray (Vector<Point>)

- A list of points to add to the optical flow tracking.

getOpticalFlowCheckPointsValidBeforeTracking() : Boolean

Returns whether BRF will check the validity of points.

setOpticalFlowCheckPointsValidBeforeTracking( Boolean value ) : void

Sets whether BRF will check the validity of points. In continuous point tracking there will be points that fail to be tracked. Their state will be false after an update call. As a developer you can react to failed points by checking the state. If you want BRF to remove failed points right before the next tracking, set this value to true. If you want to handle the removal or resetting of points yourself, set this value to false.

- value (Boolean)

- true or false

getOpticalFlowPointStates() : Vector<Boolean>

Returns the states of all currently tracked points.
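
If you handle failed points yourself, the states can be used to filter your own point list. A minimal sketch, assuming that states[i] belongs to points[i] (the page does not state the ordering explicitly); keepValidPoints is a hypothetical helper and points is whatever list of points you keep on your side.

```javascript
// Hypothetical helper (not part of BRFv4): keep only the points whose
// state is true after an update call.
// Assumption: states[i] describes points[i].
function keepValidPoints(points, states) {
  const valid = [];
  for (let i = 0; i < points.length; i++) {
    if (states[i] === true) {
      valid.push(points[i]);
    }
  }
  return valid;
}

const remaining = keepValidPoints(
  [{ x: 10, y: 20 }, { x: 30, y: 40 }, { x: 50, y: 60 }],
  [true, false, true]
); // keeps the first and the third point
```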

BRFFace

BRFFace holds all information about a tracked face. BRFv4 is now able to track more than one face, so every BRFFace has its own state. These states are set automatically by BRFv4 depending on what it is currently doing.

Compared to BRFv3, the layout of the vertices has changed.

The origin for the 3D transformation (scale, translationX, translationY, rotationX, rotationY, rotationZ) is right behind vertex index 27 (top of the nose). So if you want to put a 3D object or PNG file on top of a tracked face, you know exactly where to position it.

state (String)

The state this face is currently in. Either one of the following BRFStates:

- BRFState.RESET

- This face was reset because of a call to reset or because it exceeded the reset parameters.

- BRFState.FACE_DETECTION

- BRFv4 is looking for a face, still nothing found.

- BRFState.FACE_TRACKING_START

- BRFv4 found a face and tries to align the face tracking to the identified face. In this state the candide3 model and 3D position might not be correct yet.

- BRFState.FACE_TRACKING

- BRFv4 aligned the face and is now tracking it.

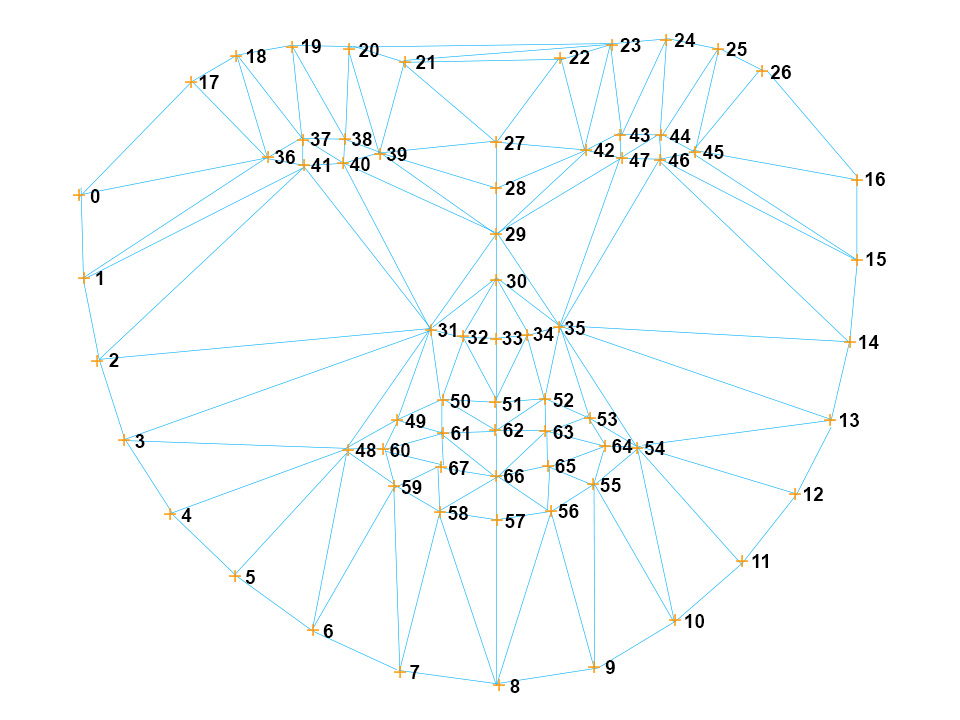

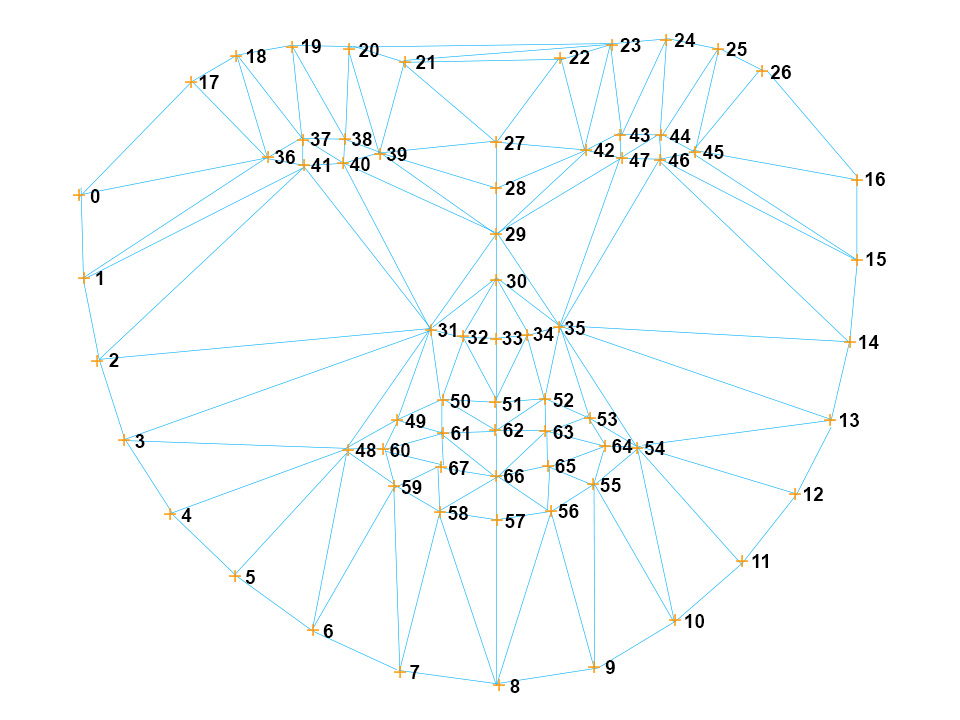

vertices (float[])

A list of vertex positions in the form of [x, y, x, y, ..., x, y], length is therefore: 68 * 2

Getting the position of a certain vertex would be like:

var vx = vertices[index * 2]; // where index is eg. 27 for the top of the nose.

var vy = vertices[index * 2 + 1];

triangles (int[])

The vertices can be connected to form triangles.

This is the vertex index list for the triangles in the following form: [i0, i1, i2, i0, i1, i2, ... , i0, i1, i2] where 3 indices span a triangle.
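
Combining the triangles index list with the flat vertices list could look like the following sketch. triangleCoordinates is a hypothetical helper; it only relies on the two array layouts described on this page.

```javascript
// Hypothetical helper (not part of BRFv4): resolve each index triple in
// `triangles` to its three {x, y} corner positions from `vertices`.
function triangleCoordinates(vertices, triangles) {
  const result = [];
  // Every 3 consecutive indices span one triangle.
  for (let t = 0; t + 2 < triangles.length; t += 3) {
    const corners = [];
    for (let k = 0; k < 3; k++) {
      const index = triangles[t + k];
      corners.push({ x: vertices[index * 2], y: vertices[index * 2 + 1] });
    }
    result.push(corners);
  }
  return result;
}

// Four vertices forming a square, split into two triangles.
const squareTriangles = triangleCoordinates(
  [0, 0, 10, 0, 0, 10, 10, 10],
  [0, 1, 2, 1, 3, 2]
);
```

Each entry of the result can be drawn as a closed path to visualize the face mesh.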

points (Vector<Point>)

Same as vertices, but a list of points in the form of [{x, y}, {x, y}, ..., {x, y}], length is therefore: 68

Getting the position of a certain point would be like:

var px = points[index].x; // where index is eg. 27 for the top of the nose.

var py = points[index].y;
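
Since vertices and points hold the same data in two layouts, one can be derived from the other. A small sketch of that relationship, with verticesToPoints as a hypothetical helper and plain objects standing in for the platform's Point type:

```javascript
// Hypothetical helper (not part of BRFv4): convert the flat
// [x, y, x, y, ...] layout into a list of {x, y} objects.
function verticesToPoints(vertices) {
  const points = [];
  for (let i = 0; i + 1 < vertices.length; i += 2) {
    points.push({ x: vertices[i], y: vertices[i + 1] });
  }
  return points;
}

const converted = verticesToPoints([1, 2, 3, 4]); // two points: (1, 2) and (3, 4)
```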

refRect (Rectangle)

This is the reference rectangle that the face tracking algorithm uses to perform the tracking.

candideVertices (float[])

The candide 3 model vertices. Can be used to visualize the 3D position, scaling and rotation.

translationX (float)

The x position of the origin of face on the image. The origin is right behind vertex index 27 (top of the nose).

translationY (float)

The y position of the origin of face on the image. The origin is right behind vertex index 27 (top of the nose).

rotationY (float)

A radian angle. Rotation around the Y axis (yaw, turning head to the left/right)

rotationZ (float)

A radian angle. Rotation around the Z axis (roll, tilt head to the left/right)

BRFMode

BRFv4 can operate in either of the following modes.

FACE_DETECTION (String)

Set this mode if you want to skip the face tracking part and only detect face rectangles. Point tracking can also be done simultaneously.

FACE_TRACKING (String)

Set this mode if you want the full face detection and face tracking. Point tracking can also be done simultaneously.

POINT_TRACKING (String)

Set this mode if you want to skip face detection and face tracking and only track points.

BRFState

A BRFFace can be in one of the following states.

FACE_TRACKING_START (String)

The BRFFace found a face in the image data and tries to align the face tracking.

RESET (String)

The BRFFace triggered a reset, either because of a call to BRFManager.reset() or because it exceeded the reset parameters set in BRFManager.setFaceTrackingResetParams().
